Google, founded in 1998 by Larry Page and Sergey Brin, grew out of a research paper they wrote at Stanford University titled “The Anatomy of a Large-Scale Hypertextual Web Search Engine”. In this paper, they outlined a new way of ranking web pages in a search engine, called PageRank. The paper still forms the conceptual basis for the Google algorithm today, despite the many changes over the years.

Since the time Google became publicly accessible, webmasters have been trying to manipulate Google search listings to get their websites to rank at the top. This practice came to be known as Search Engine Optimization, or SEO for short.

As the Google algorithm has changed, SEO practices have changed with it. These practices are commonly labeled whitehat or blackhat, depending on whether they aim to work with the ranking system or to manipulate it. In this post, we will identify the four primary stages in the history of SEO and explain how the algorithm has evolved over that time.

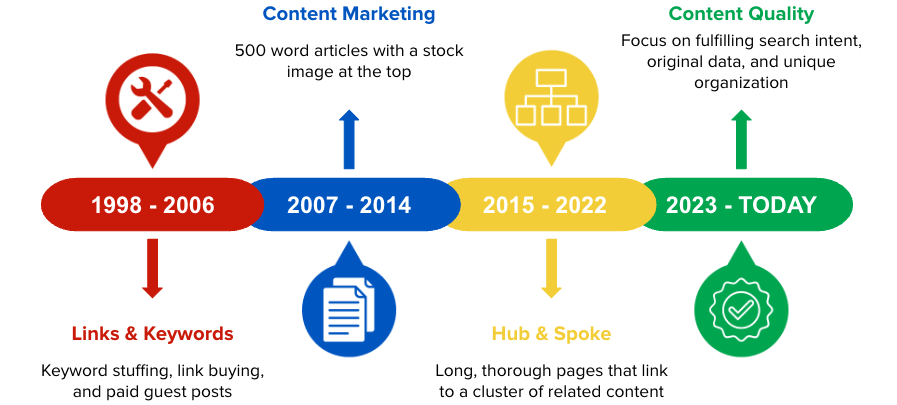

| SEO Era | Timeline | Characterized By |
| --- | --- | --- |
| Links & Keywords | 1998 – 2006 | Keyword stuffing, link buying, and paid guest posts |
| Content Marketing | 2007 – 2014 | 500-word articles with a stock image at the top |
| Hub & Spoke | 2015 – 2022 | Long, thorough pages that link to a cluster of related content |
| Content Quality | 2023 – Today | Focus on fulfilling search intent, original data, and unique organization |

Links & Keywords

Timeline: 1998 – 2006

Characterized By: Keyword stuffing, link buying, and paid guest posts

The first Google algorithm was designed to organize the web the same way academic research papers are organized. When searching for a research paper, one would consider two things:

- The keywords in the research paper

- The number of citations for the research paper

Authors of academic papers select keywords for their papers so that researchers can find the information they need. So, if I were to write an academic paper on Shakespeare, I might select “Shakespeare”, “old English poetry”, and “Romeo & Juliet” as my keywords to help others find my paper.

Keywords in the Original Google Research Paper

The number of citations a research paper receives is treated as a signal of its quality. If 50 academic papers cite Paper A and 200 cite Paper B, the assumption is that the information in Paper B is of higher quality, more thorough, and so on.

This is how pages were originally ranked on Google. By placing a keyword you wanted to target within the keyword tags and getting links on as many other websites as possible, no matter the website, you would rank highly. SEO was purely a numbers game of who could get the highest number of links.
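To make the citation analogy concrete, here is a minimal Python sketch of the PageRank idea. It is an illustration only, not Google’s production algorithm: it uses the common normalized form of the formula, the damping factor of 0.85 is the value suggested in the original paper, and the tiny link graph and page names are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank by repeated redistribution of rank.

    links maps each page to the list of pages it links to.
    The graph, page names, and iteration count are illustrative assumptions.
    """
    # Collect every page that appears as a source or a target.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)

    # Start with rank spread evenly across all pages.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical citation graph: three papers cite paper_b, one cites paper_a.
links = {
    "paper_a": ["paper_b"],
    "paper_c": ["paper_b"],
    "paper_d": ["paper_b", "paper_a"],
    "paper_b": [],
}
ranks = pagerank(links)
best = max(ranks, key=ranks.get)
print(best)
```

In this toy graph the most-cited page ends up with the highest rank, which is why early SEO focused so heavily on accumulating links.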

Common practices included spamming links in forums, paying websites for links, and setting up secondary websites for the sole purpose of linking to the main site. This was the wild west of SEO, and those days did not last long.

Content Marketing

Timeline: 2007 – 2014

Characterized By: 500-word articles with a stock image at the top

Through a series of updates from 2004 to 2007, Google’s algorithm became more advanced. Spammy backlink strategies no longer let subpar pages and websites rank. These updates rendered all of the previously discussed strategies ineffective overnight. Any irrelevant link was disregarded, so only links from contextually relevant sites counted.

Practices such as guest posting, which are still common today, became virtually irrelevant in this period, leaving many SEOs stuck in the past. Google realized that to grow its user base, it had to grow the number of results it could provide for a wide range of search queries. Enter the period known as “Content Marketing”.

In this period, Google rewarded content creation without much regard for the true quality of the content. It was a period of “content quantity over content quality”. Websites could simply publish blog articles of ~500 words with a stock photo at the top, and Google would drive incredible amounts of traffic to them, because these articles filled the gap between what users were searching for and the content available to show as a search result.

Backlinks were still important in this period, but in a different way. They only counted if they were contextually relevant to the site. Google began giving more weight to backlinks that appeared in the body of a page’s content and to links from highly trusted websites. So, a link from Apple would be worth far more than a link from a brand-new blog. The algorithm also began identifying link-building tactics like guest posting and devaluing those links.

To go back to the original idea of Google, this makes sense as a development. A citation from a world-renowned MIT physicist may carry more weight than a citation from a graduate student at any random school. The algorithm was getting closer to its original intent: show the most satisfying response to the search intent of the user.
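The shift from counting links to weighting them can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Google’s actual scoring: the `Backlink` fields, the trust values, and the `body_bonus` multiplier are all hypothetical and exist only to illustrate the reasoning above.

```python
from dataclasses import dataclass


@dataclass
class Backlink:
    source: str      # linking site (hypothetical example domains)
    trust: float     # assumed trust of the linking site, from 0.0 to 1.0
    relevant: bool   # is the linking site contextually relevant?
    in_body: bool    # does the link sit in the body content of the page?


def link_score(backlinks, body_bonus=2.0):
    """Sum a weighted value over backlinks; all weights are illustrative."""
    total = 0.0
    for link in backlinks:
        # Irrelevant links are disregarded entirely.
        if not link.relevant:
            continue
        # Links in the body of the content count more than others.
        placement_weight = body_bonus if link.in_body else 1.0
        total += link.trust * placement_weight
    return total


# One hundred irrelevant forum links versus a single trusted, relevant link.
spam_links = [
    Backlink(f"forum-{i}.example", trust=0.05, relevant=False, in_body=False)
    for i in range(100)
]
trusted_link = [
    Backlink("trusted-brand.example", trust=0.9, relevant=True, in_body=True)
]

spam_score = link_score(spam_links)
trusted_score = link_score(trusted_link)
print(spam_score, trusted_score)
```

Under these assumed weights, a single relevant link from a trusted site outscores any number of irrelevant ones, which is the opposite of the pure numbers game from the first era.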

Hub & Spoke

Timeline: 2015 – 2022

Characterized By: Long, thorough pages that link to a cluster of related content

Around 2015, the internet was beginning to “fill up”. Tens of thousands of blog sites were releasing mass amounts of content, and it became clear that there would need to be a greater weight placed on the quality of that content in the search results. To do this, a concept called “niche expertise” was introduced.

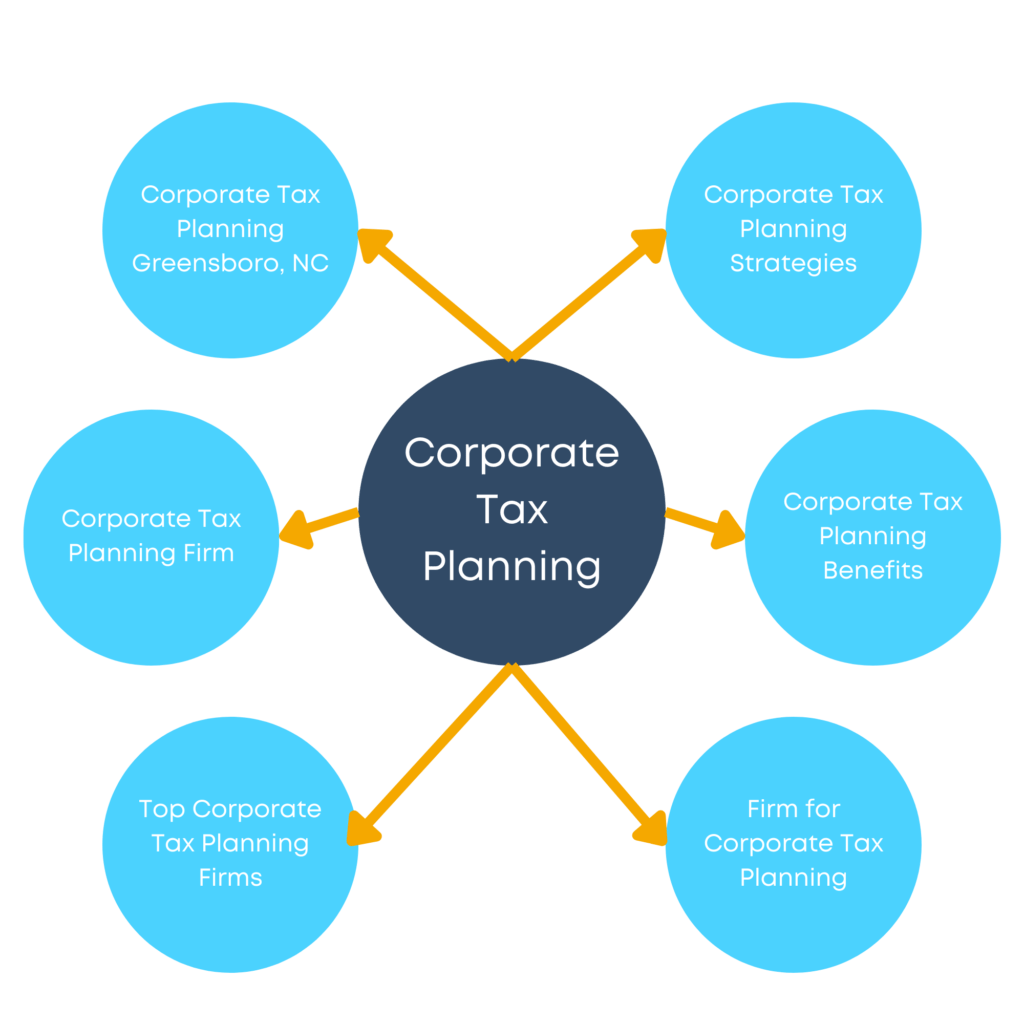

The concept of niche expertise said that a website with a high amount of original content focused on a narrow topic area could outrank a more trusted website because it demonstrates true expertise. Marketers began creating hub pages, long thorough pages covering broadly all aspects of a topic, with links to sub-pages (“spokes”) that covered each aspect in much more depth.

Here is how this looks conceptually:

Fun Fact: Before HubSpot was a CRM company, they were focused on SEO and popularized the hub and spoke concept.

It was in this period that Google began devaluing backlinks in its ranking algorithm. Google’s crawlers could now read website pages and assess the quality of the content. Backlinks remain important, even today, but they do not carry as much weight as they used to. A certain number of backlinks may still be needed to rank for a keyword, but content quality metrics allowed smaller sites to rank for keywords that used to be reserved for larger, more trusted companies.

Content Quality

Timeline: 2023 – Today

Characterized By: Focus on fulfilling search intent, original data, and unique organization

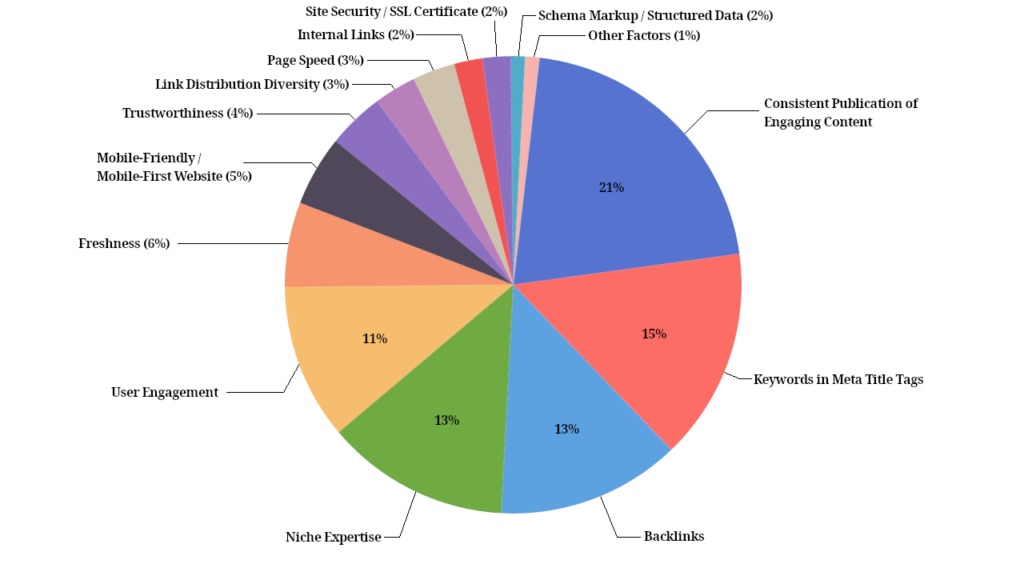

Today, the Google ranking algorithm looks like this:

Source: First Page Sage – The 2024 Google Algorithm Ranking Factors

In the First Page Sage breakdown, the factors that relate specifically to content quality (consistent publication of engaging content, niche expertise, user engagement, and freshness) make up 51% of the ranking algorithm. Niche expertise remains critical, but the algorithm has evolved one more step. Today, Google’s algorithm is advanced enough to identify who truly has the highest-quality content and, more importantly, whose content will best satisfy the search intent of its users.

This is where Google was always meant to be: a mechanism for pairing a searcher with the web page that will best satisfy their search intent.

Further Reading

To learn more about SEO and the Google algorithm, we recommend the following resources:

- Google – How Search Works

- Search Engine Land – Unpacking Google’s Massive Search Documentation Leak

- First Page Sage – The 2024 Google Algorithm Ranking Factors

If you have any questions, please reach out on our contact page.